A possible technique for giving names to nameless satellites

by Charles Phillips


First, there are several catalogs of satellites. These are lists of satellites and information about them, maintained by different organizations. What are these catalogs? A couple of my earlier articles here give some background: one discusses overlooked satellites (see “Acknowledging some overlooked satellites”, The Space Review, June 12, 2017), while another discusses why some satellites are in some catalogs and not others (see “Time for common sense with the satellite catalog”, The Space Review, April 10, 2017).

The US Air Force maintains the de facto official world satellite catalog and assigns official satellite numbers and “international designators,” which state what launch each object is associated with. The Air Force gets observations from many radar and optical sites around the world and uses them to generate and maintain orbital parameters for satellites; that is how it knows which object is from which launch. The international designator is very important when a satellite reenters, because the country that owns a satellite that reenters is responsible for any damage caused when it impacts the ground. Similarly, in a satellite collision, the country that owns the satellite that “causes” the collision is responsible for the damage.

This information is available on Space-Track.org, a website run by the US government. Let’s call that catalog the “Space Track” catalog. However, the website also lists satellites, both cataloged and uncataloged, that are not associated with a particular launch.

There are other satellite catalogs (in which observations of satellites are used to generate and update orbits), and some of these are available to the public. One is the so-called “Russian” catalog, actually an international effort run by the International Scientific Optical Network (ISON). Another is a separate catalog that consists only of a list of satellites and their orbits, maintained by an informal international network of observers (I am part of this group). Let’s call it the Observer catalog. We make educated guesses and track some satellites under the catalog numbers that we feel are correct, and we track unknown satellites, assigning them “9x,xxx” numbers. Today there are also commercial catalogs maintained by organizations with their own observing networks, but those are usually available only by subscription. And there are some very useful catalogs that have good information but don’t generate new orbital data from observations: they start with information from other catalogs, such as Space Track, and often add background information.

It is fascinating to look at some populations, such as the satellites that are not in the “official” satellite catalog, and wonder why many of them are routinely tracked and yet have not made it into the catalog. There are also a few that are routinely tracked and are in the catalog but still do not have international designators that associate them with a particular launch. It would be an interesting exercise to try to associate some of the satellites in various catalogs with particular launches.

There are a couple of ways to approach that effort. With some knowledge of orbital mechanics, we can start to note some facts. This article does not have space to go into orbital mechanics in depth, but an orbit can be described by six numbers plus the time (epoch) at which they are most accurate.[1] These could be the X, Y, and Z components of a vector from the center of the Earth to the satellite (the position vector), along with the rates of change of those components (the first derivatives of X, Y, and Z), that is, the velocity vector. Or we could use Keplerian elements, which give us more recognizable numbers to describe the orbit, such as eccentricity and inclination. Knowing the inclination lets us visualize the part of the Earth that the satellite will pass over, while knowing the eccentricity tells us whether the orbit is round or elongated. Because of the energy stored in the orbit, changing the inclination is very expensive, which makes it a very useful number for identification.

Two orbital parameters are especially helpful for associating objects in space: the inclination and the right ascension of the ascending node (RAAN). The inclination is the angle between the orbital plane and the plane of the Earth’s Equator. When a satellite is launched it goes into a particular inclination, and it takes a lot of energy to change that, so satellites that were launched together will have very similar inclinations unless they have thrusters that can change the orbit. The RAAN is the angle, measured eastward in the Earth’s equatorial plane from a fixed direction in space (the vernal equinox), to the point where the satellite crosses the Equator heading north (the ascending node). It fixes the orientation of the orbital plane in space; combined with the Earth’s rotation, it tells us which parts of the Earth a satellite passes over at a given time.
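To connect the two descriptions, here is a minimal Python sketch (not the software used for this article) that recovers inclination and RAAN from a position and velocity vector. It assumes an Earth-centered inertial frame with Z toward the North Pole and X toward the vernal equinox, and the function names are hypothetical. It uses the standard textbook construction: the angular momentum vector h = r × v is perpendicular to the orbital plane, and the node vector n = ẑ × h points at the ascending node.

```python
import math

# Illustrative sketch; function names are hypothetical.

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def inclination_and_raan(r, v):
    """Return (inclination, RAAN) in degrees from an inertial position r (km)
    and velocity v (km/s). Assumes Z points to the North Pole and X to the
    vernal equinox. RAAN is undefined for an exactly equatorial orbit."""
    h = cross(r, v)                                # normal to the orbital plane
    h_mag = math.sqrt(sum(c * c for c in h))
    cos_i = max(-1.0, min(1.0, h[2] / h_mag))      # guard against rounding
    inc = math.degrees(math.acos(cos_i))           # tilt of plane from Equator
    n = (-h[1], h[0], 0.0)                         # z_hat x h: ascending node
    raan = math.degrees(math.atan2(n[1], n[0])) % 360.0
    return inc, raan

# A satellite on the +X axis moving north-east at 51.6 degrees inclination.
speed, i = 7.5, math.radians(51.6)
print(inclination_and_raan((7000.0, 0.0, 0.0),
                           (0.0, speed * math.cos(i), speed * math.sin(i))))
```

In practice the inputs would come from a catalog's state vectors or be converted from published elements; the sketch only shows where inclination and RAAN come from geometrically.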

This is not the right place to go into the details of orbital analysis, but when a satellite is launched into orbit there is usually a payload (the operational part) and an upper stage. There are also frequently pieces of debris, like covers over telescopes. These objects all start out in the same orbit (same inclination and RAAN), but natural forces then make them drift apart. One thing that makes the orbits drift is the bulge in the Earth at the Equator: the Earth spins, and that tends to make the surface bulge at the Equator.[2] The bulge exerts a force on the objects that torques the orbit, so the orbital plane drifts around the Earth; this is referred to as precession. The rates of drift for all objects from any launch should start out very close and then slowly increase or decrease.
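The size of this effect can be estimated with the standard first-order formula for nodal precession caused by the equatorial bulge (the J2 term of the Earth's gravity field): dΩ/dt = -(3/2) n J2 (R/p)^2 cos i, where n is the mean motion, R the Earth's equatorial radius, and p = a(1 - e^2). The Python sketch below is an illustration under those assumptions, using commonly quoted constant values; it ignores drag, the Moon and Sun, and higher-order terms, and the function name is hypothetical.

```python
import math

# Illustrative sketch of first-order J2 nodal precession; name is hypothetical.
MU_EARTH = 398600.4418   # km^3/s^2, Earth's gravitational parameter
R_EARTH = 6378.137       # km, Earth's equatorial radius
J2 = 1.08263e-3          # dimensionless measure of the equatorial bulge

def j2_raan_rate_deg_per_day(a_km, e, inc_deg):
    """First-order J2 drift of the RAAN, in degrees per day.
    Negative (westward) for prograde orbits, positive for retrograde ones."""
    n = math.sqrt(MU_EARTH / a_km ** 3)      # mean motion, rad/s
    p = a_km * (1.0 - e ** 2)                # semi-latus rectum, km
    rate = -1.5 * n * J2 * (R_EARTH / p) ** 2 * math.cos(math.radians(inc_deg))
    return math.degrees(rate) * 86400.0      # rad/s -> deg/day

# Roughly ISS-like, and roughly 800 km sun-synchronous, circular orbits.
print(j2_raan_rate_deg_per_day(6778.0, 0.0, 51.6))
print(j2_raan_rate_deg_per_day(7178.0, 0.0, 98.6))
```

The first case drifts west by about 5 degrees per day; the second drifts east by roughly one degree per day, which is what makes a sun-synchronous orbit work. Because the semi-major axis and eccentricity enter through n and p, objects from one launch whose orbits slowly change size will slowly change rate as well.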


So if you have several objects and wonder whether they are from the same launch, they should first have very similar inclinations. Then, if you plot the RAAN for each object, the values will tell us whether the orbits could ever have been coplanar: from the original orbit they should drift very predictably around the Earth. This change in RAAN is caused by the equatorial bulge of the Earth and depends strongly on the inclination and altitude of the object. What we notice is that if you collect orbital parameters for several months and plot the RAAN values, you should get an approximately straight line, indicating a consistent rate of precession. The slope of that line is the rate. Objects from the same launch seem to have very similar rates, and objects from different launches can be distinguished by plotting RAAN. This article explains and justifies that assertion, although more work, now under way, is needed to quantify it better. Let’s refer to this analysis of RAAN as the “RAAN Test.”
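The slope in the RAAN Test can be measured rather than eyeballed. Here is a minimal Python sketch, assuming RAAN values in degrees and epochs in days. Because RAAN wraps from 360 back to 0, the series is first "unwrapped" by taking the smallest signed step between consecutive points, and then an ordinary least-squares line is fitted. The unwrapping assumes the true change between successive samples is under 180 degrees, so sparse data for fast-precessing objects would need care. The function names and sample values are hypothetical.

```python
# Illustrative RAAN Test fit; function names are hypothetical.

def unwrap_degrees(angles):
    """Remove 360-degree jumps so a wrapping RAAN series becomes continuous."""
    out = [angles[0]]
    for a in angles[1:]:
        step = (a - out[-1] + 180.0) % 360.0 - 180.0   # smallest signed step
        out.append(out[-1] + step)
    return out

def fit_raan_rate(epochs_days, raans_deg):
    """Least-squares slope (deg/day) and intercept (deg) of unwrapped RAAN."""
    y = unwrap_degrees(raans_deg)
    n = len(epochs_days)
    mx = sum(epochs_days) / n
    my = sum(y) / n
    sxx = sum((x - mx) ** 2 for x in epochs_days)
    sxy = sum((x - mx) * (yy - my) for x, yy in zip(epochs_days, y))
    slope = sxy / sxx
    return slope, my - slope * mx

# Hypothetical RAAN history that wraps past 0 degrees.
rate, start = fit_raan_rate([0.0, 10.0, 20.0, 30.0], [10.0, 310.0, 250.0, 190.0])
print(rate, start)   # -6.0 deg/day, starting at 10.0 deg
```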

If we sort satellites by inclination we see that many cluster at specific inclinations, mainly because of their launch site but also because of their intended missions. Without an expensive plane change, a satellite launched directly into orbit cannot have an inclination lower than the latitude of its launch site. Cape Canaveral, Florida, is at a latitude of about 28 degrees north, while the Baikonur Cosmodrome is at a latitude of about 45 degrees north. Inclination also tells us a lot about the missions of various satellites, but first let’s talk about the size and shape of orbits.
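The launch-site limit follows from spherical trigonometry: for a launch from latitude φ on an azimuth β measured clockwise from north, cos i = cos φ sin β, so the lowest inclination, equal to φ, comes from a launch due east. Here is a small Python sketch of that textbook relation; it ignores the Earth's rotation in defining the azimuth and any later plane changes, and the function name is hypothetical.

```python
import math

# Illustrative sketch; function name is hypothetical.

def inclination_from_launch(lat_deg, azimuth_deg):
    """Orbital inclination (deg) for a direct ascent from latitude lat_deg on
    launch azimuth azimuth_deg (deg clockwise from north)."""
    cos_i = math.cos(math.radians(lat_deg)) * math.sin(math.radians(azimuth_deg))
    return math.degrees(math.acos(cos_i))

for site, lat in [("Cape Canaveral", 28.0), ("Baikonur", 45.0)]:
    # Due east gives the minimum inclination; other azimuths give more.
    print(site, inclination_from_launch(lat, 90.0), inclination_from_launch(lat, 60.0))
```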

When picturing an orbit, many people think of one like that of the International Space Station (ISS). The ISS is in a very circular low Earth orbit and always stays close to the Earth’s surface. One parameter that describes an orbit is the eccentricity. The ISS has a very low eccentricity, so we know that its orbit is very circular. But some orbits have a high eccentricity, such that the low point (perigee) and high point (apogee) of the orbit are very different. For this introduction to the RAAN Test, let’s look at a smaller population of very interesting satellites in what are often referred to as “Molniya” orbits. In these orbits the perigee is low (often only a few hundred kilometers) while the apogee is very high (often at or near geosynchronous altitude). This is the classic “semi-synchronous” orbit, with a period of about 12 hours and an inclination of approximately 63 degrees; that inclination is selected because it keeps the perigee from drifting. It also causes the satellites to dwell over high latitudes, which makes them useful for communications, but many of these satellites (at least those that the US launches) are in a group whose orbits are not publicly released.
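Two numbers in this description can be checked with short calculations. In the first-order J2 model, the argument of perigee drifts at a rate proportional to (4 - 5 sin^2 i), which vanishes at the "critical" inclination of about 63.4 degrees. Separately, Kepler's third law turns a period of half a sidereal day into a semi-major axis of roughly 26,560 kilometers. Here is a Python sketch of both; the constants are commonly used values, and the function name and the sample eccentricity of 0.7 are hypothetical.

```python
import math

# Illustrative sketch; function name and sample eccentricity are hypothetical.
MU_EARTH = 398600.4418      # km^3/s^2
R_EARTH = 6378.137          # km
J2 = 1.08263e-3
SIDEREAL_DAY_S = 86164.0905

def perigee_rate_deg_per_day(a_km, e, inc_deg):
    """First-order J2 drift of the argument of perigee, in deg/day."""
    n = math.sqrt(MU_EARTH / a_km ** 3)
    p = a_km * (1.0 - e ** 2)
    s2 = math.sin(math.radians(inc_deg)) ** 2
    rate = 0.75 * n * J2 * (R_EARTH / p) ** 2 * (4.0 - 5.0 * s2)
    return math.degrees(rate) * 86400.0

# Inclination at which 4 - 5 sin^2(i) = 0, so the perigee stays put.
critical_inc = math.degrees(math.asin(math.sqrt(0.8)))

# Semi-major axis for a period of half a sidereal day (Kepler's third law).
period_s = SIDEREAL_DAY_S / 2.0
a_semi_sync = (MU_EARTH * (period_s / (2.0 * math.pi)) ** 2) ** (1.0 / 3.0)

print(round(critical_inc, 2), round(a_semi_sync))
print(perigee_rate_deg_per_day(a_semi_sync, 0.7, critical_inc))
```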

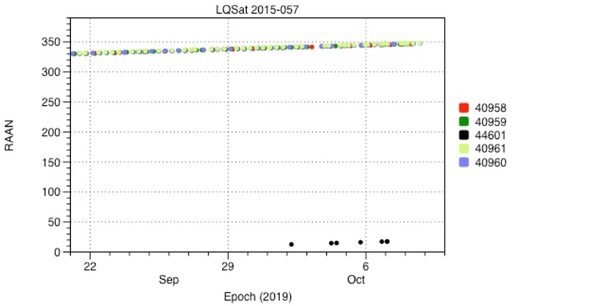

First, to check the assertion that a plot of RAAN could help associate satellites with particular launches, let’s plot a few known sets. Figure 1 shows a fairly recent launch that gives us a good check on the idea. LQSat was launched on October 7, 2015, and five pieces were cataloged from it. Four of them, the payloads 40958, 40959, 40960, and 40961, were cataloged right away. The fifth, 44601, is a piece of debris; its nonconsecutive satellite number indicates that it must have been cataloged between September 26 and October 4, 2019, so it was presumably tracked for about four years before it was cataloged. The four payloads are still in the same plane, while the debris is slightly to the “east” of them. The slopes of the RAAN precession are very similar. The payloads have a RAAN of nearly 360 degrees and the debris has a very low RAAN, which makes them look far apart on the plot, but the debris actually crosses the Equator very close to the payloads. So by inspection we can say that the objects appear to have been correctly cataloged. The piece of debris has fewer points because its orbit is updated less frequently; it is likely smaller and harder to track.

Figure 1: First Example Satellite Plot (LQSat)
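The LQSat example shows why RAAN values have to be compared with the wrap-around in mind: a payload near 360 degrees and a piece of debris just above 0 are actually close together. Here is a minimal Python sketch of a wrapped difference; the function name and the example values are hypothetical, not the actual LQSat elements.

```python
# Illustrative sketch; function name and values are hypothetical.

def raan_separation(raan_a_deg, raan_b_deg):
    """Signed angle from plane A's node to plane B's node, in [-180, 180).
    Positive means B's ascending node lies east of A's."""
    return (raan_b_deg - raan_a_deg + 180.0) % 360.0 - 180.0

# Hypothetical payload at 359.0 deg and debris at 1.5 deg.
print(raan_separation(359.0, 1.5))
```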

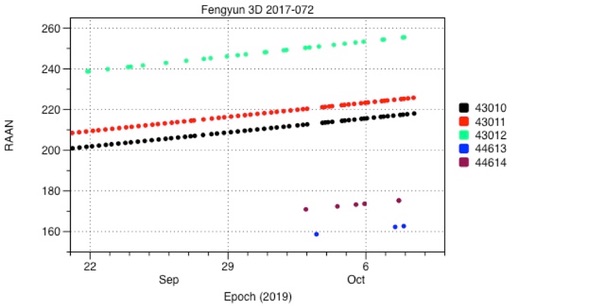

Figure 2 shows a more recent launch. Fengyun 3D was launched on November 14, 2017, and five pieces were cataloged from that launch. Three were cataloged right away and two were cataloged between September 26 and October 4, 2019. (The Air Force cataloged a lot of old pieces of debris right about that time; it must have been trying to “clean up” many old pieces from earlier launches.) Two objects (43010 and 43011) are apparently payloads and so should be larger and more stable. Object 43012 was cataloged as an upper stage; upper stages are often less stable and sometimes smaller, so it has been updated less often. Objects 44613 and 44614 were cataloged two years after launch and are updated less often than the other pieces. They appear to be pieces of debris, which would be smaller, less stable, and a bit harder to track. However, their RAAN precession rate appears to match that of the other pieces from that launch. Their apogees and perigees are all at about 800 kilometers, so they are all well above the appreciable atmosphere.

Figure 2: Second Example Satellite Plot (Fengyun 3D)
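Altitudes like these can be estimated from published elements. Two-line element sets give the mean motion in revolutions per day; Kepler's third law turns that into a semi-major axis, and the eccentricity then gives perigee and apogee heights. Here is a minimal Python sketch. It treats the TLE "mean" elements as if they were simple Keplerian ones, so the results are approximate, and the function name and example mean motion and eccentricity are hypothetical.

```python
import math

# Illustrative sketch; function name and example values are hypothetical.
MU_EARTH = 398600.4418   # km^3/s^2
R_EARTH = 6378.137       # km, equatorial radius

def perigee_apogee_km(mean_motion_rev_per_day, e):
    """Approximate perigee and apogee heights (km above the equatorial radius)."""
    n = mean_motion_rev_per_day * 2.0 * math.pi / 86400.0   # rad/s
    a = (MU_EARTH / n ** 2) ** (1.0 / 3.0)                 # semi-major axis, km
    return a * (1.0 - e) - R_EARTH, a * (1.0 + e) - R_EARTH

print(perigee_apogee_km(14.3, 0.001))
```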

So this test appears to have some validity. It is a little surprising that objects maintain a similar precession rate even years after launch, when their altitudes have changed. Of course, if you plot objects with similar inclinations and similar altitudes, their RAAN precession rates will be similar, but we will soon see that there is a lot of variation in that rate. Note that I chose satellites with orbital altitudes of about 800 kilometers for this example. To have real confidence in this test, we would need to measure the rates and have an analytical model that explains them. I am now working on that, but my early results will not fit into this article.

Let’s look at the population of some “unknown” satellites and plot some parameters to try to associate satellites with unknown origins to satellites with known origins. What can we base guesses on?

The Satellite Catalog is unfortunately very inconsistent about what satellites have their orbits released: for many “national security” launches few payloads have orbital parameters shown in the Satellite Catalog but many of the orbits for associated upper stages and debris are available. Looking at what is available tells us a lot about those objects that are not available. There is a lot to note about that, but it will have to wait for a future article. This inconsistency is probably due to inadequate training and indifferent supervision.


So if we look in the Satellite Catalog, we will see which objects are officially associated with which launch, and whether some orbits are released and some are not. If we apply the RAAN Test, the available orbits should have very comparable inclinations, RAANs, and drift rates. So we can plot RAAN for a series of missions with classified payloads, and the RAAN values for objects associated with a single launch should fall along nearly parallel straight lines.
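One way to make "similar inclinations and similar slopes" into a repeatable procedure is a simple greedy grouping. Sort objects by fitted RAAN rate, then put each one into the first group whose reference object is within chosen tolerances in both inclination and rate. This Python sketch is only an illustration. The tolerances, the function name, and the example objects are all hypothetical, and choosing the right tolerances is exactly the kind of thing that still needs to be quantified.

```python
# Illustrative sketch; tolerances, names, and sample data are hypothetical.

def group_by_inclination_and_rate(objects, inc_tol_deg=0.5, rate_tol_deg_per_day=0.01):
    """objects: list of (object_number, inclination_deg, raan_rate_deg_per_day).
    Returns a list of groups; each group is a list of those tuples, and its
    first member is the reference every later member is compared against."""
    groups = []
    for obj in sorted(objects, key=lambda o: o[2]):
        for group in groups:
            ref = group[0]
            if (abs(obj[1] - ref[1]) <= inc_tol_deg
                    and abs(obj[2] - ref[2]) <= rate_tol_deg_per_day):
                group.append(obj)
                break
        else:  # no existing group matched: start a new one
            groups.append([obj])
    return groups

# Hypothetical objects: two that match, one at a different rate, and one at
# a different inclination.
sample = [(1, 63.1, -0.150), (2, 63.2, -0.152), (3, 63.4, -0.120), (4, 57.0, -0.150)]
for g in group_by_inclination_and_rate(sample):
    print([o[0] for o in g])
```

Greedy grouping depends on sort order and on the first member of each group. A real analysis might prefer a proper clustering method, but the sketch shows the two criteria the RAAN Test relies on.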

The satellite catalog includes a number of payloads whose orbital parameters are not shown, yet from launch announcements we can make a good estimate of their inclinations. The observer community is tracking a number of objects at various inclinations and has assigned presumed satellite numbers based on searches after launches. Even if the payload’s orbit is not available, the orbits of upper stages or debris help with assignments.

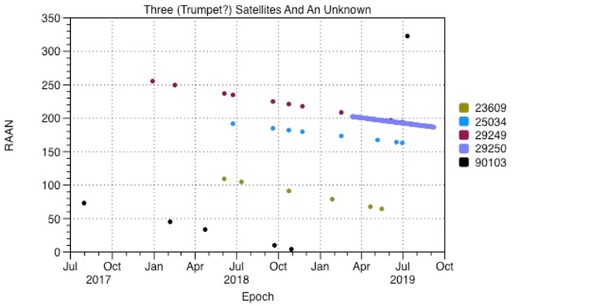

Let’s look at the satellites that the Observer community believes are the “Trumpet” electronic intelligence satellites, and plot the unknown 90103 with three satellites that the Observer catalog claims are Trumpets.

Let’s add a table (with inclination) since this is getting complex:

Table 1: Three Trumpet Satellites and an Unknown

| Object Number | Name | Orbit Available? | Inclination (degrees) |
|---|---|---|---|
| 23609 | USA 112 (Trumpet 2) | Observer catalog | 63.1 |
| 25034 | USA 136 (Trumpet 3) | Observer catalog | 63.4 |
| 29249 | USA 184 (Improved Trumpet) | Observer catalog | 62.9 |
| 29250 | USA 184 upper stage | Space Track | 64.2 |
| 90103 | Unknown | Observer catalog | 63.3 |

The values for the payloads and the unknown come from the Observer catalog, and the values for the upper stage (object 29250) come from the Air Force satellite catalog.

Figure 3: Three (Trumpet?) Satellites Plotted Together
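Inclination alone already narrows the field. As a sketch, here is Table 1 as a Python list, with a filter that keeps objects within a chosen tolerance of the unknown's inclination. The function name is hypothetical, and the 0.5-degree tolerance is an arbitrary choice, not a validated threshold.

```python
# Rows from Table 1: (object number, name, inclination in degrees).
TABLE_1 = [
    (23609, "USA 112 (Trumpet 2)", 63.1),
    (25034, "USA 136 (Trumpet 3)", 63.4),
    (29249, "USA 184 (Improved Trumpet)", 62.9),
    (29250, "USA 184 upper stage", 64.2),
    (90103, "Unknown", 63.3),
]

def inclination_candidates(table, target_number, tol_deg):
    """Hypothetical helper: object numbers within tol_deg of the target's inclination."""
    target_inc = next(inc for num, _, inc in table if num == target_number)
    return [num for num, _, inc in table
            if num != target_number and abs(inc - target_inc) <= tol_deg]

print(inclination_candidates(TABLE_1, 90103, 0.5))
```

With this tolerance, the three presumed Trumpet payloads pass and the USA 184 upper stage, at 64.2 degrees, does not. The RAAN Test is then needed to choose among the remaining candidates.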

Just by inspection, here we can see that all of these objects, launched years apart, seem to have consistent RAAN precession rates, so perhaps the Observer list can be compared to the public satellite catalog.

The upper stage for USA 184, object 29250, looks more consistent with the “presumed” payload from another launch, object 25034. The precession rates for 29249 and 29250 appear to be somewhat different. The precession rates for the three presumed payloads, objects 23609, 25034, and 29249, appear to be distinct from one another. The precession rate for 90103 looks very similar to that of object 29249. Right now this is just done by inspection, which is not a very dependable method but is good for explaining the concept.
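A step beyond inspection is to attach an uncertainty to each fitted slope and ask whether two slopes differ by more than their uncertainties allow. The Python sketch below fits an ordinary least-squares line to already-unwrapped RAAN values, computes the usual standard error of the slope, and compares two objects with a simple z-score. The threshold of 3, the function names, and the sample histories are hypothetical. The calculation also assumes independent measurement errors, which published orbital elements may not satisfy, so a "significant" difference would be evidence, not proof, that two objects came from different launches.

```python
import math

# Illustrative sketch; names, threshold, and data are hypothetical.

def slope_with_stderr(t, y):
    """Least-squares slope of y versus t and its standard error.
    Needs at least three points that do not lie exactly on a line."""
    n = len(t)
    mt, my = sum(t) / n, sum(y) / n
    stt = sum((ti - mt) ** 2 for ti in t)
    slope = sum((ti - mt) * (yi - my) for ti, yi in zip(t, y)) / stt
    intercept = my - slope * mt
    resid_ss = sum((yi - intercept - slope * ti) ** 2 for ti, yi in zip(t, y))
    return slope, math.sqrt(resid_ss / (n - 2) / stt)

def rates_differ(fit_a, fit_b, z_threshold=3.0):
    """Return (differ?, z) for two (slope, stderr) pairs."""
    (sa, ea), (sb, eb) = fit_a, fit_b
    z = abs(sa - sb) / math.sqrt(ea ** 2 + eb ** 2)
    return z > z_threshold, z

# Hypothetical unwrapped RAAN histories (degrees) sampled every 10 days.
days = [0.0, 10.0, 20.0, 30.0, 40.0]
obj_a = [0.0, -0.98, -2.02, -2.98, -4.02]
obj_b = [5.0, 4.02, 2.98, 2.02, 0.98]      # same rate as A, different plane
obj_c = [0.0, -1.98, -4.02, -5.98, -8.02]  # about twice A's rate
fa, fb, fc = (slope_with_stderr(days, y) for y in (obj_a, obj_b, obj_c))
print(rates_differ(fa, fb))
print(rates_differ(fa, fc))
```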

If you add other known objects at similar inclinations and altitudes, some of them form nearly parallel lines to these here. Perhaps this indicates that 90103 could be associated with one of them? That is yet to be shown conclusively.

As we said earlier, the payload and upper stage started out together in the same orbit, so they will both have very similar inclinations. They started out with the same size orbit, but the payload is probably a dense object while the spent upper stage is an empty cylinder: it has much less mass for its size, so its orbit is more quickly affected by forces such as atmospheric drag as it passes through perigee. When objects (payloads, upper stages, debris) are put into orbit they have a lot of energy stored in the orbit, and (due to conservation of energy) the orbits need to be “perturbed” before they will change. There are many perturbations: drag from passing through the atmosphere, the uneven gravitational pull of the Earth, the gravitational pull of the Moon, and so on.
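An empty stage responds faster to drag because of its area-to-mass ratio. Drag acceleration is a = ½ ρ v² C_D A / m, so for the same air density and speed at perigee, cutting the mass at a fixed cross-section raises the deceleration in proportion. Here is a small Python illustration. Every number in it (density, speed, drag coefficient, areas, masses) is a hypothetical placeholder, not data for any satellite discussed here.

```python
# Illustrative sketch; all numbers and the function name are hypothetical.

def drag_acceleration(rho_kg_m3, speed_m_s, cd, area_m2, mass_kg):
    """Magnitude of atmospheric drag acceleration, m/s^2."""
    return 0.5 * rho_kg_m3 * speed_m_s ** 2 * cd * area_m2 / mass_kg

# Hypothetical conditions near a low perigee.
rho, v, cd = 1e-11, 9800.0, 2.2
payload = drag_acceleration(rho, v, cd, area_m2=10.0, mass_kg=5000.0)
empty_stage = drag_acceleration(rho, v, cd, area_m2=10.0, mass_kg=1000.0)
print(empty_stage / payload)
```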

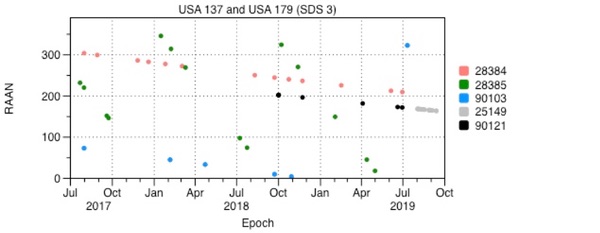

Let’s apply the RAAN Test to more satellites that were tracked as “unknown” satellites by the observer community. Another example is the set of presumed Satellite Data System (SDS) launches. We can plot RAAN values for the known objects from a flight along with those for unknown satellites, which again carry 9x,xxx numbers in the observer catalog.

We still need a table so we can sort out what is what:

Table 2: SDS-3 Satellites and Unknowns

| Object Number | Name | Orbit Available? | Inclination (degrees) |
|---|---|---|---|
| 25148 | USA 137 | No | Unknown |
| 25149 | USA 137 r/b | Space Track | 62.8 |
| 28384 | USA 179 | Observer catalog | 63.5 |
| 28385 | USA 179 r/b | Space Track | 57 |
| 90103 | n/a | Observer catalog | 63.3 |

Figure 4: Two (SDS-3?) Satellites And An Unknown

Let’s go back to the launch of USA 137, which the Observer community believes is a Satellite Data System version 3 (SDS-3) payload, and plot objects from it together with another satellite that we think is also an SDS-3. Here object 25149 is the upper stage from USA 137 (according to Space Track) and object 28384 is USA 179 (according to the Observers). Object 28385 is the Space Track object identified as the USA 179 upper stage. The Observer community tracked an unknown, 90121, for a long time; recently the orbit for object 25149 was released, and the two objects appear to be the same. As far as we know, the Observer community is not tracking the USA 137 payload. Here we see that the RAAN for object 90103 appears roughly parallel (in this view) to both 25149 and 28384.

One thing that jumps out right away is the very distinct behavior of object 28385, whose precession rate is very different even from that of object 25149, which should be an upper stage in a very similar orbit. The inclinations of 28384 and 28385, a payload and its supposed upper stage, also differ by more than six degrees, which is a large difference.
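The inclination difference alone predicts a noticeably different rate. In the first-order J2 model the RAAN drift is proportional to cos i, and the orbit's size and shape enter only through factors that cancel when two otherwise identical orbits are compared. Here is a Python sketch that uses the Table 2 inclinations and assumes, hypothetically, that 28384 and 28385 share the same semi-major axis and eccentricity. The values below are placeholders, not their actual elements, and the function name is hypothetical.

```python
import math

# Illustrative sketch; function name and orbit size/shape are hypothetical.

def j2_raan_rate(a_km, e, inc_deg):
    """First-order J2 RAAN drift in rad/s."""
    MU, RE, J2 = 398600.4418, 6378.137, 1.08263e-3
    n = math.sqrt(MU / a_km ** 3)
    p = a_km * (1.0 - e ** 2)
    return -1.5 * n * J2 * (RE / p) ** 2 * math.cos(math.radians(inc_deg))

# Hypothetical orbit size and shape, identical for both objects.
a, e = 26560.0, 0.7
ratio = j2_raan_rate(a, e, 57.0) / j2_raan_rate(a, e, 63.5)
print(f"{ratio:.3f}")  # 1.221
```

Under that assumption, the object at 57 degrees would precess about 22 percent faster than the one at 63.5 degrees, so even a few months of data would separate their RAAN lines. Differences in orbit size or eccentricity would change that figure.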

Just what is going on has yet to be determined. Space Track has made mistakes before, but it should be very hard to mis-catalog an upper stage like this. It is also odd to see a large object like an upper stage updated as seldom as 28385 is.

There is currently only one unknown satellite at about 63 degrees, and there are a few potential launches it could have come from. There are more launches (such as USA 142, which we presume is DSP F-19) and more unknowns at about 28 degrees, but those must wait for a future article because of the length of this one. There are also several unknowns at even lower inclinations, but they too must wait. This technique is also being applied to objects from the ISON catalog, and I plan to look at some “analyst” satellites from Space Track. Since the Air Force is currently releasing more orbital parameters, we should find out what some of the unknowns are. Other unknowns may turn out, as 90121 did, to be satellites missing from the satellite catalog.

Still, when we plot the values of RAAN for various satellites, it does give us some insight into what objects appear to be associated with what launches. And it raises some questions. This article doesn't have space for an analytical discussion or for some of the other plots, but this RAAN test may have some utility in determining which launches “unknown” satellites are associated with. In some ways this technique is a solution in search of a problem!

I would love to hear from anyone who would like to work with me on finalizing the analytical parts of this technique and submitting it as a formal article on orbital mechanics.

Acknowledgement

This article could not have been written without help from three colleagues. Mike Marston wrote the critical piece of software that was used to sort and group satellites. He is far better at writing C code than I am. The plots were produced with a very well designed application, DataGraph. Most people make plots using another application but DataGraph is far faster and more intuitive. John Dormer also helped me with C code and analysis and I could not have gotten these results without his help.

Endnotes

1. Bate, Mueller, and White, Fundamentals of Astrodynamics, Dover Publications, 1971, p. 58.
2. Ibid., p. 156.
